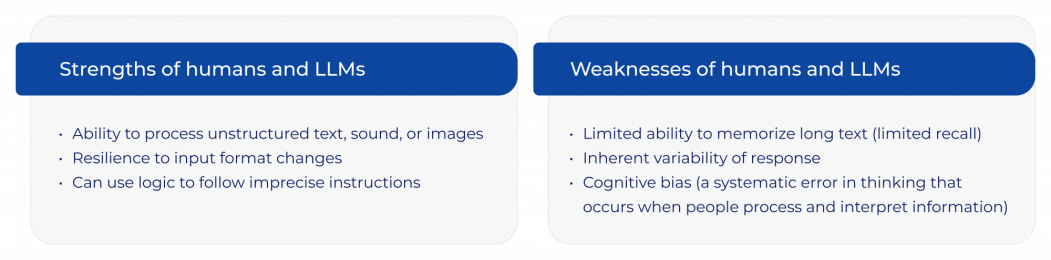

Like humans, large language models (LLMs) produce varying results and sometimes make mistakes. However, this does not stop us from relying on humans, and it should not stop us from relying on LLMs, as we explain below.

The key to building reliable workflows based on LMMs is understanding how these models process data. In many ways, they process data just like humans. This is why the key to successful LLM-based workflows is setting them up in a way that would help a human succeed at the same task.

Why LLMs are Like Humans

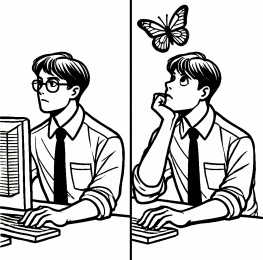

LLMs have more in common with the human brain than with conventional computer programs.

Unlike traditional programs with fixed outputs, LLMs can give variable results and even make mistakes, just like us. Thus, by understanding humans we can understand LLMs.

From Text to Meaning and Back

LLMs work by converting text to meaning. But what is “meaning”?

For LLMs and the neural networks in our heads (also known as our brains), meaning is an array of numbers. The numbers representing the question are transformed to obtain the numbers representing the answer, which are then converted back to text.

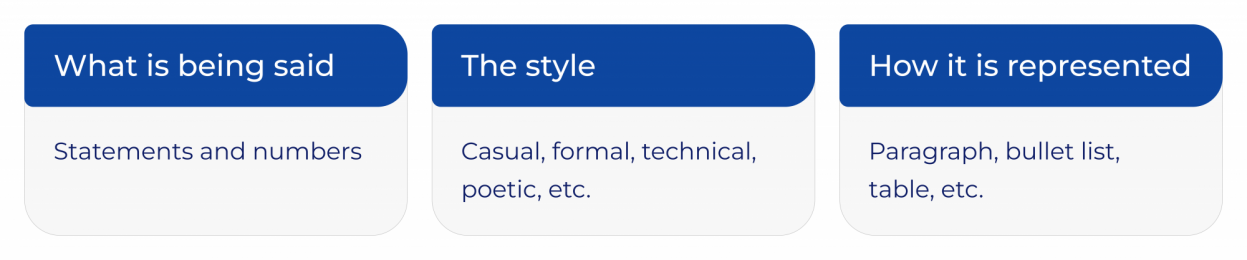

The numbers that represent meaning in LLMs are what machine learning scientists call “latent variables.” Each latent variable captures an individual aspect of the substance, style, and format of the text, which we will collectively call “meaning” in a broad sense.

This broad definition of meaning includes:

However, there are limits to the LLMs’ capability for precise text retention, which we can call limited recall.

Limited Recall (Recall Window vs. Context Window)

Conventional computer programs operate with text and numbers directly and carry no risk of corrupting those. On the other hand, because LLMs map everything into meaning, precise retention of the input is not guaranteed. Understanding this fundamental feature of LLMs is key to using them successfully in business applications.

The recall window is not the same as the model’s context window.

The context window is the maximum amount of text the model can process at once. In modern models, the context window may be hundreds of pages long.

The recall window is the maximum amount of text the model can recite without error, and it is much smaller than the context window.

Just like humans, the model can remember the meaning of an entire 300-page book but would have difficulties in reciting the words precisely for even half a page. Does this disqualify LLMs from business applications?

There is another component of business processes that suffers from the same limitation: humans. For example, a person cannot perfectly memorize a 20-page term sheet and enter the trades from memory.

But they can look at the term sheet and access that data as they work.

Extracting data from security offerings documents, term sheets, or what-if trade requests is a high-value business application of LLMs. However, asking the model to extract data fields from a large input document and insert them into the output results in a significant percentage of errors due to the limitations of recall.

When a large body of text is mapped into meaning, complex data elements, such as payment schedules, are not always relayed with precision. But getting these details exactly right is critical, and humans do not fare well at this task either.

But How Do You Build Reliable LLM-Based Data Extraction Workflows?

There are two common-sense strategies that produce reliable results for humans and LLMs alike (based on our joint research with Alex Daminoff).

Markup: asking the model to specify where each data field is located within the document, and then extracting them by using conventional code. This is similar to using copy-and-paste instead of asking an LLM or a human to retype each data field.

Checklists: keeping track of the data fields captured at each stage to ensure every data field in the input is included in the final output.

Variability of Response

Variability is an inherent part of many trusted numerical algorithms.

While we do not expect variability in conventional data-processing code, it is part of many trusted mathematical algorithms used in capital markets, such as the Monte Carlo method and portfolio optimization. Variability is also inherent in machine learning, and therefore in LLMs.

Variability is also part of human nature. For example, if you ask your employee to draft a document, will they create exactly the same document, word for word, if asked at 10:00 AM and at 10:01 AM? Probably not, even if no additional information was received in the intervening minute.

Will another, equally qualified employee, create exactly the same document, word for word? Most certainly not.

If there is an error in drafting the document, would exactly the same error happen no matter whether the work began at 10:00 AM or 10:01 AM? If the error was caused by a fleeting distraction or forgetfulness, then it would not.

How to Deal with Variability in Humans

Variability should not stop us from relying on humans to perform mission-critical tasks, as we know ways to deal with it:

- Delegate tasks to qualified employees

- Assign tasks that are within human capabilities and can be performed reliably

- Appoint managers to supervise the work and validate the results

How to Deal with Variability in LLMs

Unlike the chat and AI assistant applications of LLMs, where a certain degree of variability is acceptable, LLMs integrated into business workflows must perform in a consistent and reproducible manner.

But, as with humans, we can deal with it in the following ways:

- Assign tasks within the model’s capabilities and use more powerful models for especially complex tasks.

- Recognize the fundamental limitations of LLMs (many of which are shared with humans) and ensure these limitations do not prevent the task from being completed.

- Use the model under continuous human supervision (as a co-pilot) or implement a validation workflow for the completed results.

- Perform the task multiple times with different random seeds (this is something we cannot ask humans to do) and compare the results to detect errors.

Cognitive Bias

As anyone wearing glasses or contact lenses will know, side-by-side comparison is what optometrists use during an eye exam.

|

Rank the clarity of image A from 1 to 10. |

Rank the clarity of image B from 1 to 10. |

Comparing images.

Humans and LLMs tend to amplify distinctions in a side-by-side comparison (distinction bias).

Distinction bias is one of the many forms of cognitive bias, applicable to humans and LLMs alike. In this case, we are taking advantage of the bias. In other cases, our goal is to avoid it.

How We Can Use This Knowledge When Building Business Workflows

Asking a model to assign an absolute score to each document in isolation is unreliable because the scores will vary significantly from one attempt to the next.

However, suppose your goal is relevance ranking:

- Sort news feed articles according to their relevance to the asset you are trading.

- Rank resumés of job candidates, etc.

Taking a pair of documents and asking the model which one should be ranked higher generates accurate and reliable results.

Summary

Just like humans, large language models (LLMs) can produce different results on multiple attempts and occasionally make mistakes. Yet, we trust humans despite their imperfections, and the same approach should apply to LLMs.

The key to building reliable workflows based on LLMs is understanding how LLMs process data. In many ways, LLMs process data just like humans. This is why the key to successful LLM-based workflows is setting them up in a way that would help a human succeed at the same task.

Interested in Learning More about Our AI Developments?

Read our winner’s interview for Best New Technology (AI & Machine Learning) or get insights from the latest Q&A with Alexander Sokol on AI in Quantitative Finance.